How to Improve Webpage Performance with In-Depth Content Analysis

A couple of months ago we launched our Technical SEO Audit for Webflow Websites Service. We put together a killer landing page, and I even wrote an article about how we optimized it for SEO. Here’s the honest truth: that landing page has attracted exactly one visit from Google, a single unpaid organic click. It’s almost embarrassing to admit, but I value transparency far too much to pretend otherwise. That’s why, today, I’m calling in some heavyweight reinforcements: Search Atlas.

Search Atlas is an all-in-one, AI-driven SEO platform that centralizes and automates everything related to search optimization: keyword research, technical audits, backlink analysis, local and ecommerce SEO, and semantic content creation. At the end of 2024, they added a powerful new feature to their toolkit, SCHOLAR, and have gradually improved it since.

SCHOLAR stands for Semantic Content Heuristics for Objective Language Assessment and Review. It evaluates both AI-generated and human-written website content, using metrics directly tied to Google’s ranking factors. According to Search Atlas, these measured signals closely reflect how Google judges content quality.

It’s designed to grade content quality objectively and algorithmically, mirroring the standards Google uses to evaluate web pages. Search Atlas reports that its scoring correlates strongly with what actually ranks at the top of Google, so the results may be even more predictive of real SERP performance than traditional authority benchmarks. SCHOLAR aims to help users “reverse engineer” Google’s ranking logic and refine their content accordingly.

Why does that matter? Because a page’s quality—multiplied by its ranking signals—is what determines where it lands in search results. Even a site with stellar domain authority, topical relevance, and excellent user experience can get dragged down by low page quality. SCHOLAR helps to measure that quality and guide its users to improve it.

Disclaimer: This post is not sponsored by or affiliated with Search Atlas in any way. I recently purchased a subscription for my own use and am sharing my genuine experiences and findings as I explore the tool. Everything you’ll read here comes from hands-on testing—no promotions, just honest insights.

So, how does the content on my landing page stack up according to SCHOLAR? It’s time to find out.

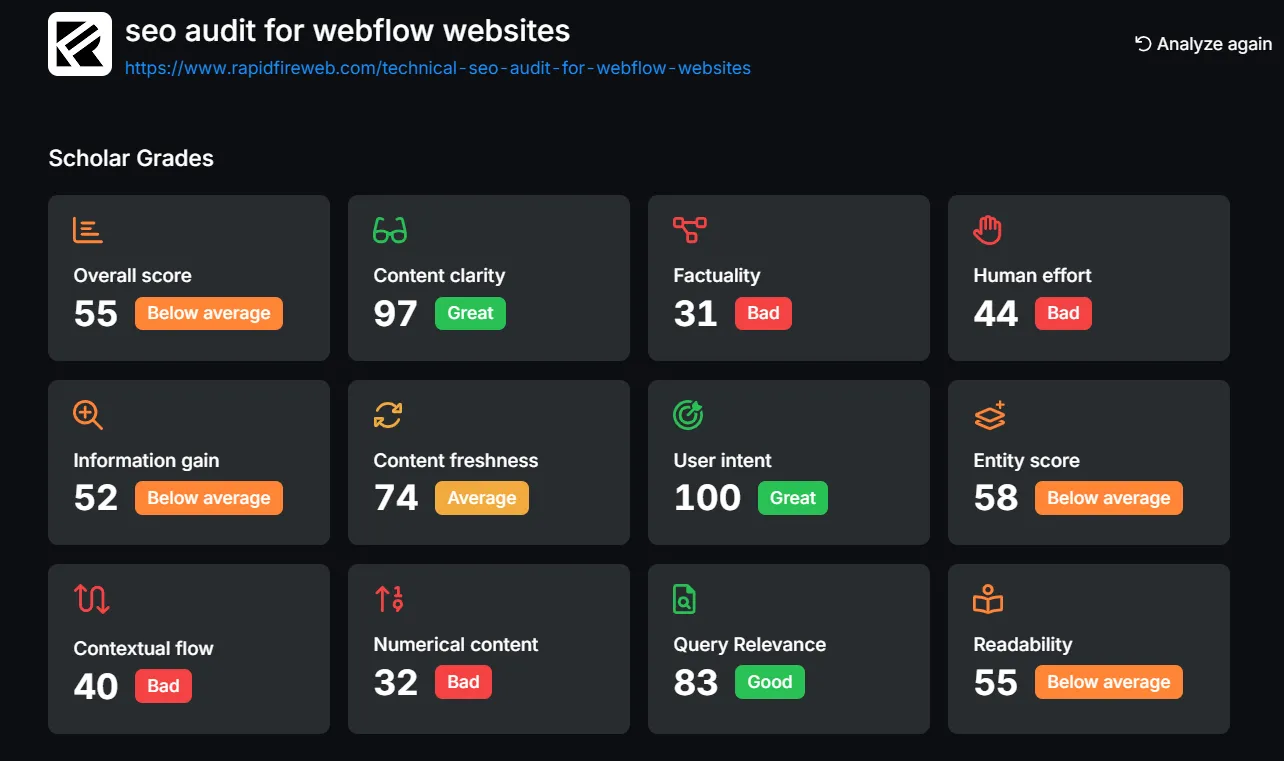

Seeing the Overall Results

After I hit “Analyze,” SCHOLAR wasted no time delivering grades for my page. And let’s just say the overall score is disappointing. According to SCHOLAR’s own verdict, my landing page is “below average.” We managed to score well in a few areas, but there were definitely some rough spots too. And yes, I’ve started saying “we”; I guess I feel personally judged by that score! I’ll try not to take it to heart as I dig into the details.

Important note: SCHOLAR doesn’t just analyze the page in general; you need to provide a keyword or a keyword group for it to analyze against. Some metrics will be heavily affected by your choice of keyword, while others won’t. For my analysis, I used the long-tail keyword “SEO audit for Webflow websites.”

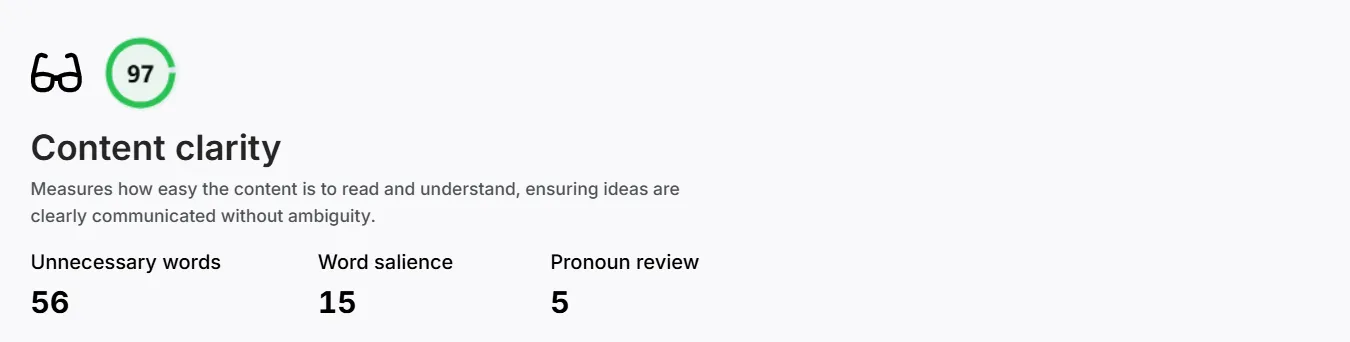

Content Clarity

The content clarity metric is all about keeping your page free of unnecessary words and fluff. The goal is to reduce excess text while boosting factual content and genuine value for both users and search engines. According to SCHOLAR, my landing page is solidly in the green zone, scoring a strong 97 for clarity. It did flag 56 unnecessary words—which I think is pretty tolerable, considering the high score. Unfortunately (or perhaps thankfully), SCHOLAR doesn’t provide a detailed list of those words, so you’ll have to take its word for it.

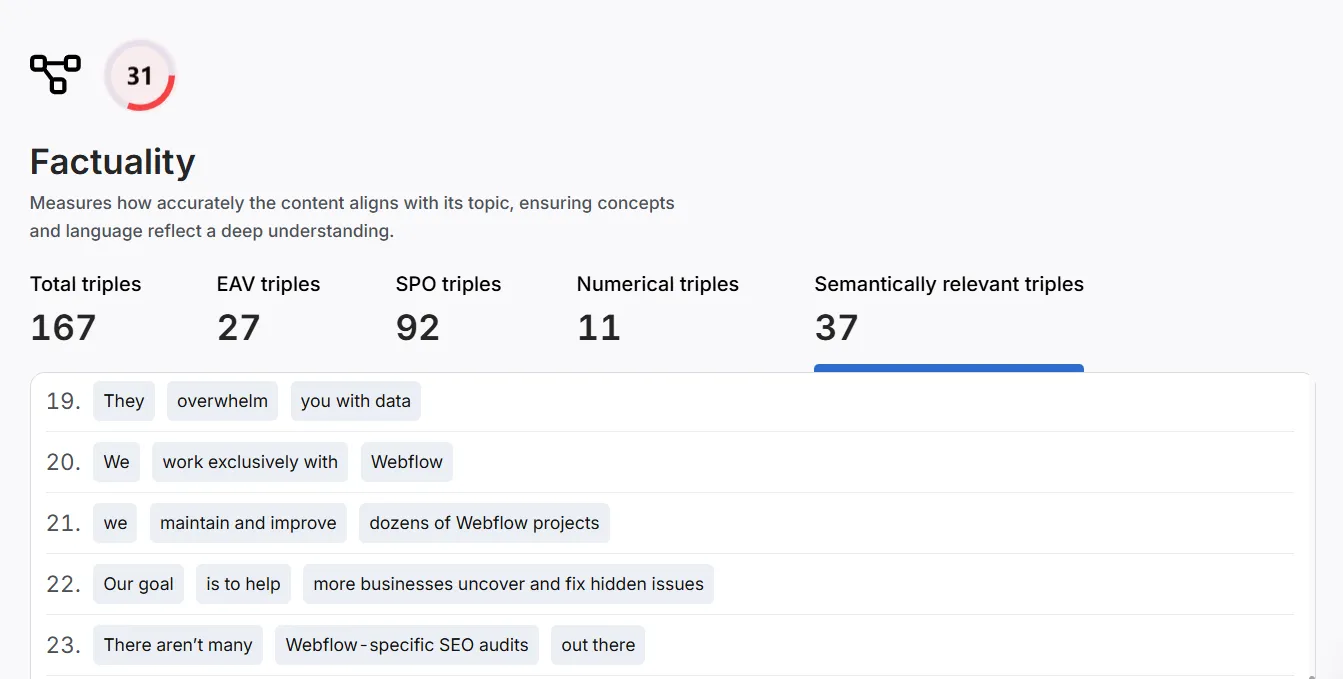

Factuality

This was the worst score the page received: just 31 points and a red “Bad” badge.

According to the Search Atlas team, Google values content grounded in actual facts. If a page lacks useful information and is instead padded with fluff, it’s unlikely to rank well. The key here is to make your content factually relevant to the user’s search intent.

Analyzing our page, I see that instead of saying, “More tailored audits exist, but they’re often limited or wildly expensive,” we could go with, “More tailored audits exist, but they’re often limited or their price varies from $500 to $2,500.” That range is still broad, but it’s undeniably more concrete than just “wildly expensive.”

Looking closer at my Search Atlas report, the page contains 167 total triples, but only 27 are Entity-Attribute-Value triples and just 11 are numerical triples. The number of semantically relevant triples is only 37—and I suspect these are the most important, since they tie directly to what users are actually looking for. It’s clear that I need to focus on increasing this number.
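To make the terminology concrete, here is a minimal sketch of how statements on a page could be modeled as Entity-Attribute-Value triples and how the numerical ones could be counted. SCHOLAR’s actual extraction method isn’t public, so the `Triple` class, the digit-based `is_numerical` check, and the sample triples are all hypothetical; the last two triples mirror the vague and concrete pricing sentences above.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """A hypothetical Entity-Attribute-Value triple extracted from page text."""
    entity: str
    attribute: str
    value: str

    def is_numerical(self) -> bool:
        # Assumption: a triple counts as numerical if its value contains a digit.
        return bool(re.search(r"\d", self.value))


def summarize(triples: list[Triple]) -> dict[str, int]:
    """Count all triples and the numerical subset."""
    return {
        "total": len(triples),
        "numerical": sum(t.is_numerical() for t in triples),
    }


# Hypothetical triples for illustration; not SCHOLAR output.
page_triples = [
    Triple("SEO audit", "platform", "Webflow"),
    Triple("tailored audits", "price", "wildly expensive"),
    Triple("tailored audits", "price range", "$500 to $2,500"),
]

print(summarize(page_triples))  # {'total': 3, 'numerical': 1}
```

Only the concrete price range registers as numerical, which is the whole point of rewriting “wildly expensive.”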

My takeaway and action plan for improving factuality? Add more firm, affirmative statements describing what our audit actually delivers. I plan to gather stats on our audits, like the average number of issues discovered and real-world results after fixes, and build those concrete details into the page.

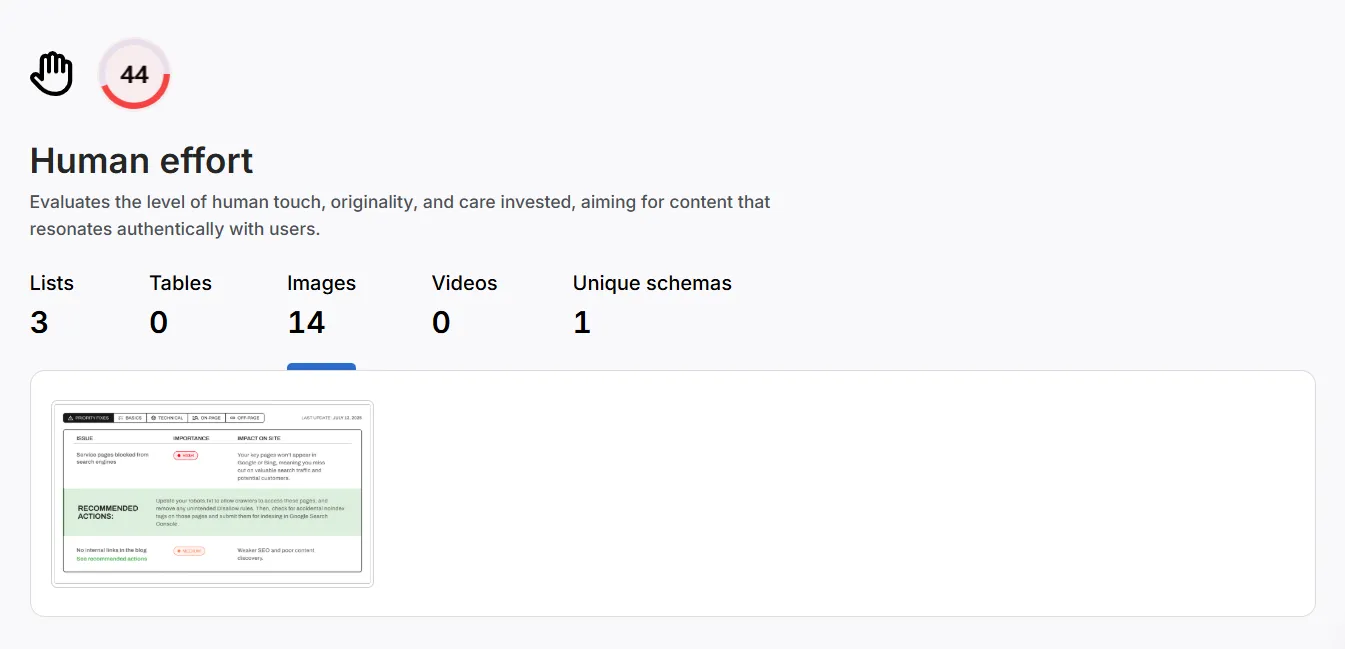

Human Effort

With a score of 44 and a badge that literally says “bad,” I’m not going to lie—I’m a bit offended. I spent a good chunk of time crafting the content for that page, and effort really does mean Effort with a capital E. Sure, I ran everything through ChatGPT to catch grammar mistakes (English isn’t my first language), but I can't accept that this final polish tanked the score so much.

So, I dug deeper to figure out what Search Atlas really means by “human effort” and how it’s measured. Surprisingly, it’s not about tone, style, or the language itself. It’s mostly about the presence of elements that go beyond plain text—think lists, tables, images, videos, and schema markup. Personally, I’m not convinced “human effort” is the best label for this metric. LLMs abuse lists and charts all the time. To me, the real “human touch” comes from features LLMs can’t generate convincingly—like authentic quotes, personal anecdotes, custom photos, and original illustrations. Machines can fake some of these, but they really shouldn’t. I don’t support passing off AI-generated content as truly human.

For Rapid Fire’s SEO audit landing page, we’ve got 3 lists (actual HTML lists, not just stylized text), zero tables, zero videos, and one schema markup. SCHOLAR claims we have 14 images, but in fact only one of them, the main illustration, is a real image; the rest are icons or purely decorative.
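If you want to sanity-check these counts on your own page, a rough tally of non-text elements is easy to script with Python’s built-in `html.parser`. This is a hypothetical checklist, not SCHOLAR’s method. Treating images with empty or missing `alt` text as likely icons or decoration is my own assumption, based on the common accessibility convention of giving decorative images an empty `alt`.

```python
from collections import Counter
from html.parser import HTMLParser


class RichElementCounter(HTMLParser):
    """Tallies lists, tables, videos, images, and JSON-LD blocks (hypothetical checklist)."""

    TRACKED = {"ul", "ol", "table", "video"}

    def __init__(self) -> None:
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in self.TRACKED:
            self.counts[tag] += 1
        elif tag == "img":
            # Empty or missing alt text often signals an icon or decorative image.
            self.counts["img" if attributes.get("alt") else "img_no_alt"] += 1
        elif tag == "script" and attributes.get("type") == "application/ld+json":
            # JSON-LD schema markup is embedded in a script tag of this type.
            self.counts["schema"] += 1


def count_rich_elements(html: str) -> Counter:
    parser = RichElementCounter()
    parser.feed(html)
    parser.close()
    return parser.counts


# Hypothetical page fragment for illustration.
sample = """
<ul><li>Crawl</li></ul>
<ol><li>Fix</li></ol>
<img src="hero.png" alt="Audit illustration">
<img src="icon.svg" alt="">
<script type="application/ld+json">{"@type": "Service"}</script>
"""
counts = count_rich_elements(sample)
print(counts["ul"], counts["ol"], counts["img"], counts["img_no_alt"], counts["schema"])
```

Splitting images this way is one way to see the gap between SCHOLAR’s raw image count and the number of images that actually carry content.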

My takeaway? I can add a chart (no clue what kind just yet) and, most importantly, testimonials from real clients—something I’ve been meaning to do anyway. I’m holding off on video for now, but it’s definitely on the radar. As for schema markup, we’ve added a service schema and could add an FAQ schema, though I’m honestly skeptical about how much it really helps.
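For reference, an FAQ schema is typically added as a JSON-LD block using schema.org’s `FAQPage`, `Question`, and `Answer` types. The sketch below builds one from question-and-answer pairs; the `faq_schema` helper and the placeholder Q&A are hypothetical, and the sketch says nothing about whether the markup actually moves rankings.

```python
import json


def faq_schema(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder Q&A; replace with the page's real FAQ content.
markup = faq_schema([
    ("What does the audit cover?", "Placeholder answer describing the audit scope."),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```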

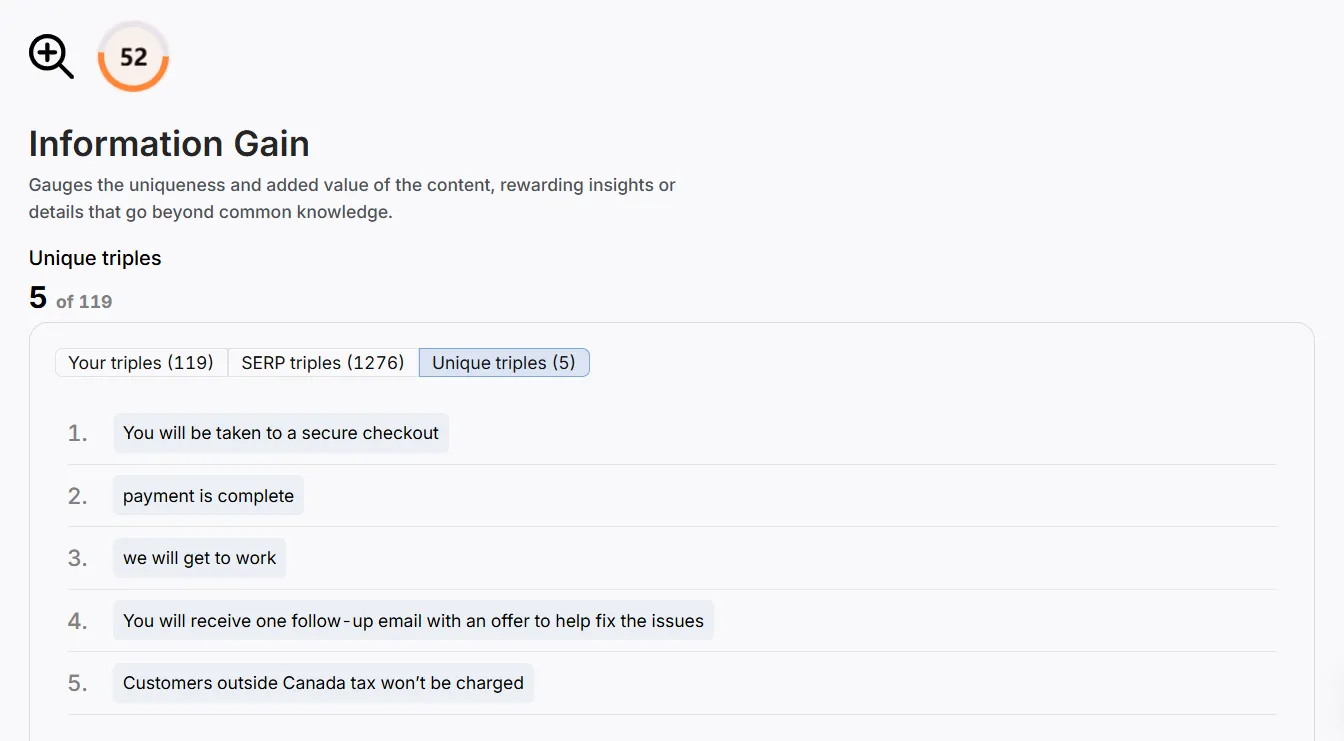

Information Gain

This metric measures the uniqueness of the information the page provides. Google and LLMs want something they don’t already know. Sadly, our SEO audit landing page scores below average here, and the number of “unique triplets” is straight-up embarrassing; honestly, there are too few to be worth mentioning.
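One simple way to picture a “unique triplet” is as a set difference: facts on your page that no competing page states. This is an illustration of the idea rather than SCHOLAR’s scoring, and the triples are hypothetical. Exact string matching is also a big simplification, since a real system would have to recognize the same fact phrased differently.

```python
Triple = tuple[str, str, str]  # (entity, attribute, value)


def unique_triples(page: set[Triple], competitors: list[set[Triple]]) -> set[Triple]:
    """Return the triples on our page that no competitor page contains."""
    seen_elsewhere = set().union(*competitors)
    return page - seen_elsewhere


# Hypothetical triples for illustration only.
page = {
    ("audit", "platform", "Webflow"),
    ("audit", "deliverable", "crawl report"),
    ("audit", "performed by", "Webflow specialists"),
}
competitors = [
    {("audit", "platform", "Webflow"), ("audit", "deliverable", "crawl report")},
    {("audit", "platform", "Webflow")},
]

unique = unique_triples(page, competitors)
print(sorted(unique))  # [('audit', 'performed by', 'Webflow specialists')]
print(f"{len(unique) / len(page):.0%} of the page's triples are unique")  # 33% of the page's triples are unique
```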

But this whole topic makes me question: what does “unique information” actually mean? If you ask me, it’s extremely tough to come up with an original idea. A few genius minds manage it; the rest of us mostly remix and iterate on what already exists in the world. Our thoughts and work are shaped, sometimes completely, by others’ ideas—and we just create new versions of old concepts. That said, everyone has the ability to contribute unique information when they share personal experience. That’s mine to own, and if I keep it honest, that’s where genuine uniqueness comes from.

Frankly, I’ll check this article in SCHOLAR after publishing; I feel confident it offers something unique because it’s my real, unfiltered perception of Search Atlas audit results. But what about making our SEO audit landing page unique? We haven’t invented radical new techniques; we’re just proud of how we do things and of the quality we provide, not necessarily of its novelty. At the end of the day, visitors to our page do get the information they’re searching for, and if Google thinks it isn’t unique, I’m okay with that.

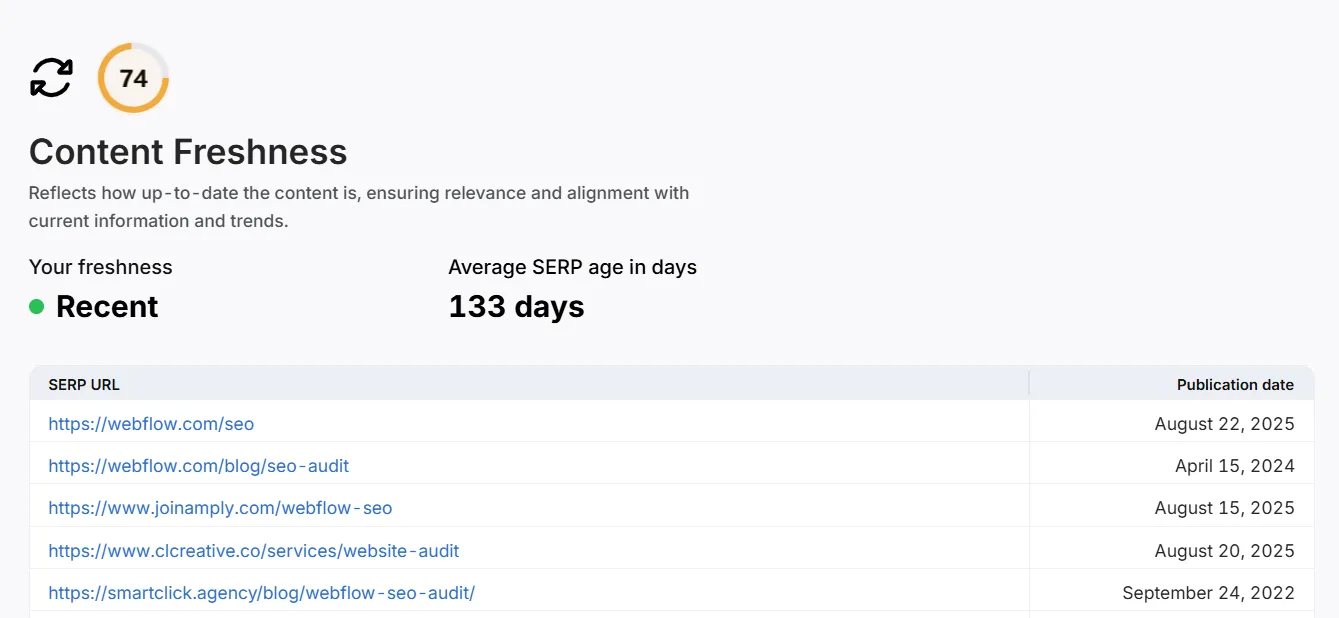

Content Freshness

This metric is tricky. In the past, Google determined freshness mainly by looking at the publish date—and simply republishing a piece could give it a minor boost. But in the age of LLMs, search engines are genuinely hungry for fresh, relevant content. The publish date probably still matters—but if your page is packed with outdated info, LLMs won’t be visiting it and recommending it to anyone (or at least, they shouldn't).

I’m considering an experiment with our Client Research Center, a resource hub where we’ve gathered useful tips on managing and optimizing Webflow sites. Some posts are two or three years old, and frankly, they’ve lost relevance as Webflow’s platform has evolved. My plan is to review them all and update them with current information. Would that improve their ranking or citation scores? My hunch is that it can boost page performance. And SEO benefits aside, it’s simply a good way to add value for website visitors.

Essentially, this metric compares your page’s last published date against a collection of competitor pages. That competitor list is impressively thorough, and it’s a good reality check on how diligent your competitors and fellow agencies are about updating their websites.
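As a rough illustration of that comparison, the sketch below computes what share of competitor pages were last updated before yours. The function name and all the dates are hypothetical, and SCHOLAR doesn’t document how it turns the comparison into a score.

```python
from datetime import date


def share_of_older_competitors(page_date: date, competitor_dates: list[date]) -> float:
    """Fraction of competitor pages last updated before our page was."""
    if not competitor_dates:
        return 0.0  # nothing to compare against
    older = sum(d < page_date for d in competitor_dates)
    return older / len(competitor_dates)


# Hypothetical dates for illustration only.
our_page = date(2025, 3, 1)
competitor_pages = [date(2024, 6, 15), date(2025, 5, 20), date(2023, 11, 2), date(2025, 1, 10)]

share = share_of_older_competitors(our_page, competitor_pages)
print(f"{share:.0%} of competitors were updated less recently")  # 75% of competitors were updated less recently
```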

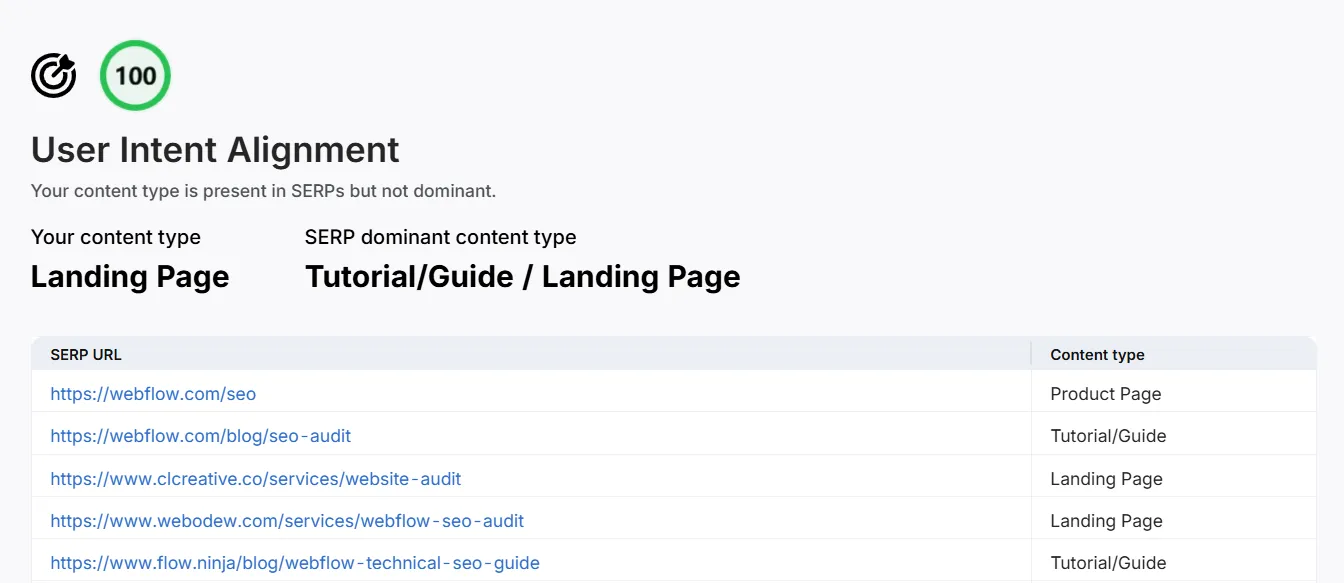

User Intent Alignment

This is the metric where we nailed a perfect score. Search Atlas identifies our content type as a Landing Page, which lines up with high-ranking competitors who mostly have either Landing Pages or Tutorial/Guide pages for the same keyword. Basically, Search Atlas tells me: “Your top-scoring competitors have the same kind of content—and they’re doing great. Kudos for matching the leaders!”

For contrast, when I analyzed Rapid Fire’s homepage for the keyword “Webflow development,” our User Intent score was the lowest (just 10). Our content type there is identified as a Product Page, while the competitors’ pages are Landing Pages. That makes sense, since Search Atlas is comparing our homepage to service pages that actually rank for this keyword. When I analyze our homepage for the keyword “Webflow agency Toronto,” our User Intent Alignment score jumps to 100, which again makes perfect sense, because our homepage is mostly about Rapid Fire as an agency, not about the services it provides. My verdict: this metric is highly dependent on the keyword you choose, and scores can swing wildly simply by changing your focus keyword. If you aren’t sure which keywords your page is focused on, the User Intent Alignment score can look confusing or irrelevant.

Entities

Search Atlas starts by pulling a cluster of sub-nodes—related concepts and terms—from the network of the keyword you entered, then checks which ones are present on your page. You don’t have to include every single entity related to your keyword, but having more of them helps your content offer comprehensive coverage of the topic, which signals topical authority to search engines and LLMs.
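A bare-bones version of that presence check might look like the sketch below. The entity list is a hypothetical stand-in for the sub-nodes SCHOLAR pulls, and whole-word matching is an assumption; real entity detection would also catch synonyms and variants.

```python
import re


def entity_coverage(page_text: str, entities: list[str]) -> tuple[float, list[str]]:
    """Share of entities mentioned as whole words on the page, plus those missing."""
    if not entities:
        return 0.0, []
    text = page_text.lower()
    missing = [
        entity for entity in entities
        if not re.search(r"\b" + re.escape(entity.lower()) + r"\b", text)
    ]
    return (len(entities) - len(missing)) / len(entities), missing


# Hypothetical sub-nodes for "SEO audit for Webflow websites".
related = ["Webflow", "technical SEO", "site speed", "schema markup", "crawl errors"]
sample_copy = "Our technical SEO audit for Webflow sites checks schema markup and site speed."

share, missing = entity_coverage(sample_copy, related)
print(f"{share:.0%} covered, missing: {missing}")  # 80% covered, missing: ['crawl errors']
```

The `missing` list is the useful part: it points at subtopics the page could cover next.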

Our SEO audit landing page scored below average here, with a score of 58 and 78 entities found in total.

To improve this score, as I see it, the best strategy isn’t just to add more text, but to enrich the page with highly relevant content that’s still slightly different from what’s already there. In fact, this metric gave me an idea to add use cases for the audit—just a few examples of who typically orders it and how it benefits them. Since this topic is so interrelated, expanding our examples should naturally bring more entities onto the page and strengthen our authority.

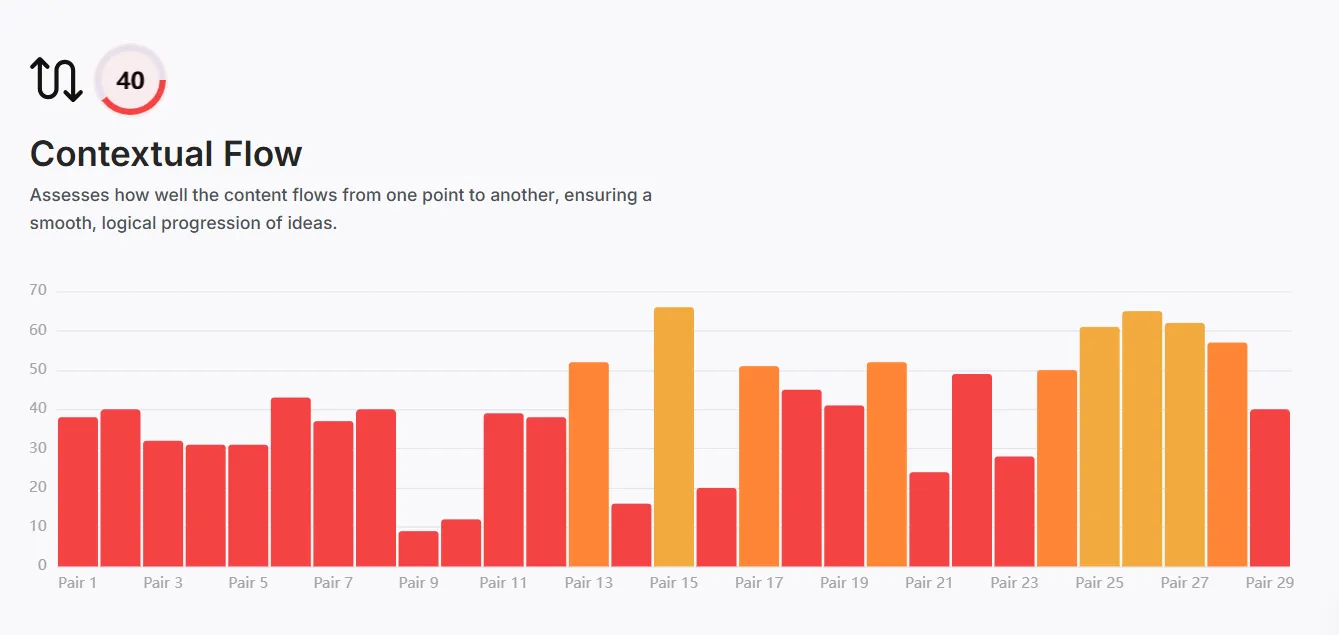

Contextual Flow

This metric looks at how logically the ideas progress through your page by analyzing the sequence of headings. SCHOLAR gave our SEO audit page a pretty low score here (40, Bad). The worst-performing pair was the jump from the H3 heading “A clean build supports growth” to the H2 heading “What you Get,” which is where we transition from the “Why it matters” section to the “What you get” section. Looking at it now, I clearly see the gap: the sections don’t connect as smoothly as they should.

What’s the takeaway? I need to make sure each section leads into the next, not just stands on its own. Sections should flow together, so the end of one resonates closely with the start of the next.

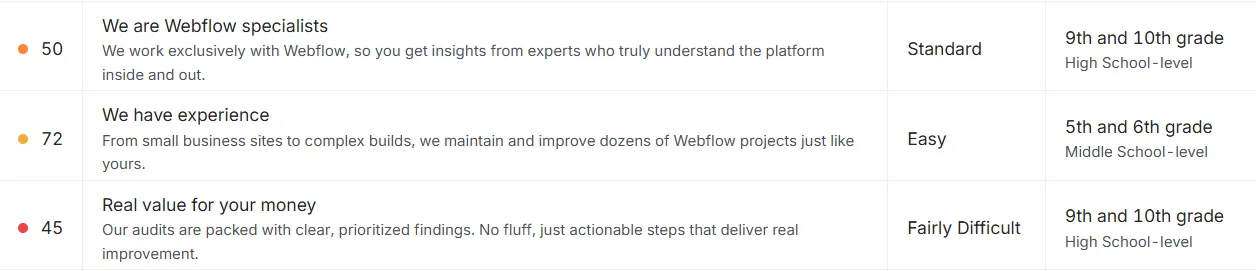

The best-scoring pair was “We are Webflow specialists” and “We have experience,” both H3 headings in the same section.
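To get a feel for why some transitions score worse than others, here is a crude sketch that compares headings by word overlap (the Jaccard index of their word sets). Word overlap is only a stand-in for whatever semantic comparison SCHOLAR actually performs, but on the two pairs above it happens to order them the same way.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words two headings have in common."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def adjacent_pairs(headings: list[str]) -> list[tuple[str, str]]:
    """Consecutive heading pairs, in page order."""
    return list(zip(headings, headings[1:]))


pairs = [
    ("A clean build supports growth", "What you Get"),     # SCHOLAR's worst pair
    ("We are Webflow specialists", "We have experience"),  # SCHOLAR's best pair
]
for first, second in pairs:
    print(f"{jaccard(first, second):.2f}  {first!r} -> {second!r}")
```

On a full page, `adjacent_pairs` would turn the ordered list of headings into the transitions to score.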

Numerical Score

This metric is closely related to the numerical triples from the Factuality category. It measures the density of numerical information in your content. Pages that reinforce information with quantifiable insights tend to rank better and communicate more trust. On our page, we have just 14 numbers within a total of 1,749 words, a density of about 0.8%, which falls between 0.5% and 1%. To reach the green zone for this metric, the proportion should be closer to 2.5%.
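The arithmetic behind those figures is easy to reproduce. The sketch below uses a hypothetical regex to spot numbers and divides by a whitespace word count, which is an assumption about how density is measured. It also works out roughly how many numbers the page would need at the 2.5% target.

```python
import math
import re

# Hypothetical pattern: integers, decimals, thousands separators, optional percent sign.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def numeric_density(text: str) -> float:
    """Numbers found in the text divided by its whitespace-separated word count."""
    words = text.split()
    return len(NUMBER.findall(text)) / len(words) if words else 0.0


def numbers_needed(word_count: int, target: float) -> int:
    """Smallest count of numbers that reaches the target density."""
    return math.ceil(word_count * target)


print(f"{14 / 1749:.2%}")           # 0.80%
print(numbers_needed(1749, 0.025))  # 44
print(numeric_density("More tailored audits exist, but they're often limited "
                      "or their price varies from $500 to $2,500."))  # 0.125
```

In other words, getting from about 0.8% to 2.5% at the same length means going from 14 numbers to about 44, roughly three times as much concrete data.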

Query Relevance

This metric measures the semantic distance between the query (the keyword you’re analyzing) and the primary attributes of the page, which are the title and headings. We’re solidly in the green zone here, with a score of 83. It’s important to remember that this metric can swing depending on which keyword you’re analyzing—your score will vary with your choice of focus keyword.
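“Semantic distance” usually means comparing vector representations of two texts, often with cosine similarity, where distance is one minus similarity. The sketch below uses plain word-count vectors instead of the embeddings a tool like SCHOLAR would presumably use, and the page title is a hypothetical example, so treat it purely as an illustration of the math.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Bag-of-words counts; a crude stand-in for a semantic embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = "SEO audit for Webflow websites"
# Hypothetical title plus two headings mentioned in this article.
page_text = "Technical SEO Audit for Webflow Websites. Why it matters. What you get."

similarity = cosine_similarity(term_vector(query), term_vector(page_text))
print(f"similarity {similarity:.2f}, distance {1 - similarity:.2f}")  # similarity 0.65, distance 0.35
```

Swapping in a different query changes the vector on one side of the comparison, which is why this kind of score moves with your focus keyword.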

Readability

This metric measures how easy it is for users to digest the content on your page. The general recommendation is to write at about an 8th-grade reading level—though of course, that varies if your content is aimed at kids or scientific audiences. Our page scores 55 here, which falls into the “Below Average” category, landing us at a middle school (7th–8th grade) reading level. Honestly, I’m happy with that. I don’t think the content needs to be simplified any further.
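Grade levels like this usually come from formulas such as Flesch-Kincaid, which combines average sentence length and average syllables per word: 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. SCHOLAR doesn’t say which formula it uses, so the sketch below is a general illustration, and its syllable counter is a rough heuristic rather than a dictionary lookup.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, dropping a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level using the heuristic syllable counter."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


print(round(flesch_kincaid_grade("We managed to score well in a few areas."), 1))
```

An 8th-grade target corresponds to a result of about 8; short sentences and shorter words pull the number down.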

Main Takeaway

SCHOLAR’s analytics offer valuable insight into your page’s health and performance, measured by the keyword you choose. For my landing page—the one I’m hoping will climb up the search rankings—the overall score isn’t great, and that lines up perfectly with the low traffic I’m actually seeing. Reviewing the SCHOLAR results, I made a few notes to myself on how the page can be improved. My action list right now:

- Add more numerical data

- Include at least one chart or table

- Add testimonials

- Add use cases

- Ensure sections flow logically from one to the next

When I look at this list, it’s striking how these recommendations are exactly what any solid SEO expert would suggest, even without SCHOLAR’s detailed metrics. At the end of the day, it always comes back to the value the page gives users. That means offering the most comprehensive, relevant information, answering every question someone might have. If someone searching for an SEO audit for their Webflow website lands on my page, they should find everything they need, presented in different formats (not just walls of text, but also lists, images, and charts), and in easily digestible language.

When it comes to AEO (Answer Engine Optimization), the same principles apply. LLMs value up-to-date, thorough content just as much as Google does.

Going through SCHOLAR’s scoring gave me a fresh perspective, forcing me to think about aspects I’d previously overlooked. It’s been a reality check and a motivation boost in one shot. So I view the time spent analyzing as well invested—and I hope this article offers real value and some insights to anyone struggling with search rankings.
